Use Case Examples

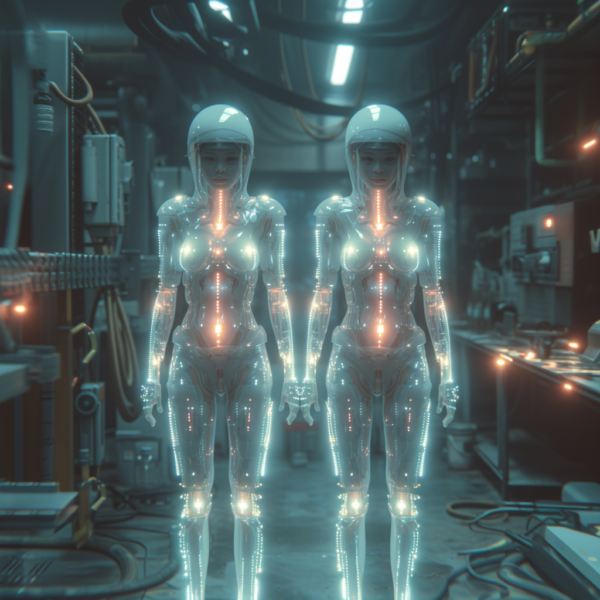

NPCs (Non-Player Characters)

NPCs have long been integral to enriching the gaming world experience. However, the primary challenge with NPCs today is that they are derived from static, pre-recorded data, which limits their responses and behaviors. This often leads to the repetition of scripted lines, shattering the illusion of interacting with sentient beings and thus diminishing the immersive experience. The current solution has been to create multiple script pathways for NPCs to minimize repetition, but this approach exponentially increases the number of assets and complexity of asset creation, ultimately capping the variety of interactions.

For mobile gaming platforms, where asset size is a critical constraint, the substantial data required for pre-recorded NPC assets poses an even greater challenge. As a result, NPC interaction in mobile games is frequently simplified to basic sounds or minimal dialogue.

The advent of edge-AI, capable of operating in real time or near real time on the device itself, can overcome these limitations.

NPC edge-AI would leverage a variety of AI types:

- Generative emotional speech in multiple languages

- Generative non-speech audio expressions, such as shouts, cries, laughter, etc.

- Generative animations for lip-syncing, facial expressions, and body language

- Generative 3D models for varied appearances

- Generative dialogue and script

The implementation of edge-AI will significantly enhance NPCs, making them more realistic and interactive than ever before, and enable the use of advanced NPCs where it was previously not viable due to the high costs and amount of data associated with asset creation.

Player Character Cloning and Customization

A longstanding aspiration in video game development is to immerse the player fully within the game by cloning them into a character.

A cloned character will mirror the player’s real-life appearance, behavior, speech, and emotional expressions, yet be controlled by the game developers’ script.

This feature can personalize the main character, allowing players to step into the shoes of their virtual selves, or it can enhance NPCs. In multiplayer online games, this adds a new dimension to gameplay, enabling friends to embody their true selves in collaborative play.

Such technology also allows for far greater customization than what is typically available in the character creation stages of role-playing games. Using generative edge-AI, a character’s voice, emotional expressions, and other defining behaviors can be tailored to craft a truly unique persona, or chosen from a preset selection of traits.

Background Music

Precise synchronization of background music with on-screen action has been a hallmark of good filmmaking since the early days of silent movies, where live piano players in movie theaters would adapt melodies in seconds to match the unfolding scenes. This practice evolved into modern movie soundtracks, where music scores are meticulously tailored to align with the final cut of the film, achieving second-by-second synchronization.

In video games, however, the events on the screen are directed by the player, and the game developer can only construct a framework for interaction. This dynamic makes it challenging for most games to plan music with the level of synchronization required for a fully immersive audio-visual impact. Consequently, even the best video game soundtracks today often resemble a radio, switching between channels based on the upcoming gameplay scenario, such as entering combat or a new scene.
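The "radio" behavior described above can be pictured as a simple lookup from a coarse gameplay scenario to a pre-composed track. The following is only a minimal sketch of that idea; the scenario names, track file names, and the `RadioStyleMusic` class are hypothetical and are not taken from any particular game or engine.

```python
from enum import Enum, auto


class Scenario(Enum):
    """Coarse gameplay scenarios (illustrative assumption)."""
    EXPLORATION = auto()
    COMBAT = auto()
    NEW_SCENE = auto()


# Hypothetical pre-composed tracks, one "channel" per scenario.
TRACKS = {
    Scenario.EXPLORATION: "explore_loop.ogg",
    Scenario.COMBAT: "combat_loop.ogg",
    Scenario.NEW_SCENE: "scene_intro.ogg",
}


class RadioStyleMusic:
    """Switches tracks only when the scenario changes, like changing channel."""

    def __init__(self):
        self.current = None

    def on_scenario(self, scenario):
        """Select the track for the scenario; return True if the track changed."""
        track = TRACKS[scenario]
        changed = track != self.current
        self.current = track
        return changed
```

Because the music reacts only at scenario boundaries, nothing the player does inside a scenario (a near miss, a well-timed jump) can influence the score, which is the limitation this section describes.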

To be blunt, modern video games have not yet consistently realized the seamless music-visual synchronization that movies have mastered for over a century. The notable exceptions, such as Rayman Legends with its music levels, Geometry Dash, and Beat Saber, have achieved this synchronization using time-fixed level scrolling and have seen huge success as a result.

https://www.youtube.com/watch?v=qAnEwyJV2JY

https://www.youtube.com/watch?v=rrGXBfmbuIc

https://www.youtube.com/watch?v=wab1sBprLvs

With real-time edge-AI music generation, achieving complete music-visual synchronization in all types of video games is now a possibility, promising an unparalleled level of gaming experience.

Player Interfaces

Current player interfaces typically rely on devices like hand controllers, touch screens, keyboards, and mice, which offer a limited range of data input. Likewise, feedback to players is often constrained to visuals on the screen, audio clips, and occasional vibrations from hand controllers. For conveying complex information, the prevalent method involves displaying text onscreen.

Edge-AI paves the way for innovative interface and feedback mechanisms, such as:

- Generative speech output in various languages

- Automatic speech recognition for input

- Dialogue interpretation with the player

- Player body movement detection through camera analysis

Textual feedback functions effectively for literate users, which encompasses most players. Yet, there’s an expanding niche in educational games for young children who are pre-literate or have limited reading skills. In such cases, vocal feedback is standard. Implementing edge-AI to provide spoken instructions in educational games can emulate the natural way humans teach, leading to a more effective and engaging learning experience for children.

Moreover, children can interact with the game using voice recognition, enabling a full-fledged verbal exchange between the educational game and the young learner. If a child’s verbal response is complex, edge-AI can interpret the varied expressions to understand the intended meaning, as humans often communicate the same idea in different ways.
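To illustrate what "the same idea in different ways" means in practice, the sketch below maps differently worded answers to a single intent. It is a deliberately simple stand-in: it uses word overlap (Jaccard similarity) instead of a speech or language model, and the intents, example phrasings, and the `interpret` function are all hypothetical.

```python
import re

# Hypothetical intents with example phrasings (illustrative data only).
INTENT_EXAMPLES = {
    "pick_red": ["the red one", "i want red", "red please"],
    "pick_blue": ["the blue one", "i want blue", "blue please"],
}


def tokens(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a, b):
    """Word-overlap similarity between two token sets, from 0.0 to 1.0."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def interpret(utterance, threshold=0.2):
    """Return the best-matching intent, or None if nothing is close enough."""
    words = tokens(utterance)
    best_intent, best_score = None, 0.0
    for intent, examples in INTENT_EXAMPLES.items():
        for example in examples:
            score = jaccard(words, tokens(example))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None
```

With this toy data, `interpret("Um, I think I want the red ball")` and `interpret("Red, please!")` both resolve to `"pick_red"`, while an unrelated answer such as `"banana"` returns `None` so the game can ask again. A real edge-AI system would replace the overlap score with a learned model.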

Another significant application is the interpretation of body movements via camera feeds. Advances in AI for motion analysis have far outstripped older technologies like Xbox Kinect. The precision and interactivity afforded by edge-AI in capturing body movements can introduce new dimensions of game control and player interaction, and potentially spawn entirely new game genres.

Voiceovers

Voiceovers are a traditional technique frequently utilized in films and broadcasting to narrate and enhance the depth of the viewing experience.

In contemporary video games, voiceovers are predominantly used as a means to convey the narrative or to guide players through tutorials.

However, with the advancement of edge-AI emotional speech technology, voiceovers can dynamically adjust their content in response to the game state, akin to a live, engaging sports commentator providing detailed play-by-play analysis.

In a racing game, for example, the commentator will describe in detail which car you chose and, more importantly, which trims and other enhancements you selected, along with the benefits and drawbacks of your current trim choice.

The game will also give you, the driver, a voice, so that you can talk to other drivers or other people and describe exactly where you are driving, how you are driving, what you are driving, and the cool 20-second drift you just pulled off.

Voiceovers in this context can be considered a specialized function of a realistic, edge-AI-controlled NPC, where the focus is solely on the auditory aspect.

Storyline

The concept of an AI-generated storyline is centered around the idea of an AI composing or altering game narratives in real-time, responding to game states while adhering to a framework established by the game developers.

While the core narrative of a game will undoubtedly remain under the purview of the art director, future games could be augmented with dynamic subplots crafted by AI.
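One way to picture "adhering to a framework established by the game developers" is a rule check applied to every AI-proposed subplot before it reaches the player. The sketch below is only an assumption about how such a check could look; `StoryFramework`, `SubplotProposal`, `find_violations`, and the specific rules are hypothetical and do not describe an existing system.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class StoryFramework:
    """Developer-defined limits (all fields are illustrative assumptions)."""
    protected_characters: frozenset[str]
    allowed_locations: frozenset[str]
    max_new_characters: int = 1


@dataclass
class SubplotProposal:
    """A candidate subplot, e.g. produced by a generative model."""
    location: str
    characters_removed: set[str] = field(default_factory=set)
    new_characters: set[str] = field(default_factory=set)


def find_violations(framework, proposal):
    """Return a list of rule violations; an empty list means the subplot is allowed."""
    problems = []
    if proposal.location not in framework.allowed_locations:
        problems.append(f"location not allowed: {proposal.location}")
    for name in sorted(proposal.characters_removed & framework.protected_characters):
        problems.append(f"protected character removed: {name}")
    if len(proposal.new_characters) > framework.max_new_characters:
        problems.append("too many new characters")
    return problems
```

A game loop could discard or regenerate any proposal for which `find_violations` returns a non-empty list, so the core narrative stays intact regardless of what the generator suggests.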

Significant research is underway to determine how to steer and guarantee the quality of AI-generated stories that meet specific criteria. Although this research is ongoing, there are strong indications that it is only a matter of time before such technology matures to the point where an AI can seamlessly write or adapt a story in a video game without deviating from essential guidelines or producing incoherent content.

Summary

Lingotion will enhance immersion for most game genres. It is a true game changer that can be used in almost any type of game and will probably result in the birth of new genres, such as open dialogue-based detective games.